Alibaba researchers unveil Marco-o1, an LLM with advanced reasoning capabilities


The recent release of OpenAI o1 has drawn considerable attention to large reasoning models (LRMs) and is inspiring new models aimed at solving complex problems that classic language models often struggle with. Building on the success of o1 and the concept of LRMs, researchers at Alibaba have introduced Marco-o1, which enhances reasoning capabilities and tackles open-ended problems where clear standards and quantifiable rewards are absent.

OpenAI o1 uses “inference-time scaling” to improve the model’s reasoning ability by giving it “time to think.” In essence, the model spends more compute during inference to generate more tokens and review its responses, which improves its performance on tasks that require reasoning. o1 is known for its impressive reasoning capabilities, especially on tasks with standard answers, such as mathematics, physics and coding.
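OpenAI has not published the details of how o1 spends its extra inference compute. For a generic picture of what “more compute at inference time” can buy, the sketch below uses a different, well-known technique: self-consistency, which samples several answers and keeps the most common one. `noisy_solver` is a hypothetical stand-in for a single sampled model response, and the 60% accuracy figure is an arbitrary assumption for illustration.

```python
import random
from collections import Counter
from typing import Callable


def majority_vote(sample_answer: Callable[[random.Random], str], n_samples: int, seed: int = 0) -> str:
    """Spend more inference compute by drawing n_samples answers and returning the most common one."""
    rng = random.Random(seed)
    answers = [sample_answer(rng) for _ in range(n_samples)]
    # most_common(1) returns a one-element list: [(answer, count)].
    return Counter(answers).most_common(1)[0][0]


def noisy_solver(rng: random.Random) -> str:
    """Hypothetical stand-in for one sampled response: correct ("42") about 60% of the time."""
    return "42" if rng.random() < 0.6 else rng.choice(["41", "43", "44"])


# One sample can easily be wrong; with 101 samples the vote almost always lands on "42".
print(majority_vote(noisy_solver, n_samples=101))
```

The trade-off is the one the article describes: each extra sample costs another forward pass, and accuracy improves only because the model is given more attempts at the same question.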

However, many applications involve open-ended problems that lack clear solutions and quantifiable rewards. “We aimed to push the boundaries of LLMs even further, enhancing their reasoning abilities to tackle complex, real-world challenges,” Alibaba researchers write.

Marco-o1 is a fine-tuned version of Alibaba’s Qwen2-7B-Instruct that integrates advanced techniques such as chain-of-thought (CoT) fine-tuning, Monte Carlo Tree Search (MCTS) and reasoning action strategies.

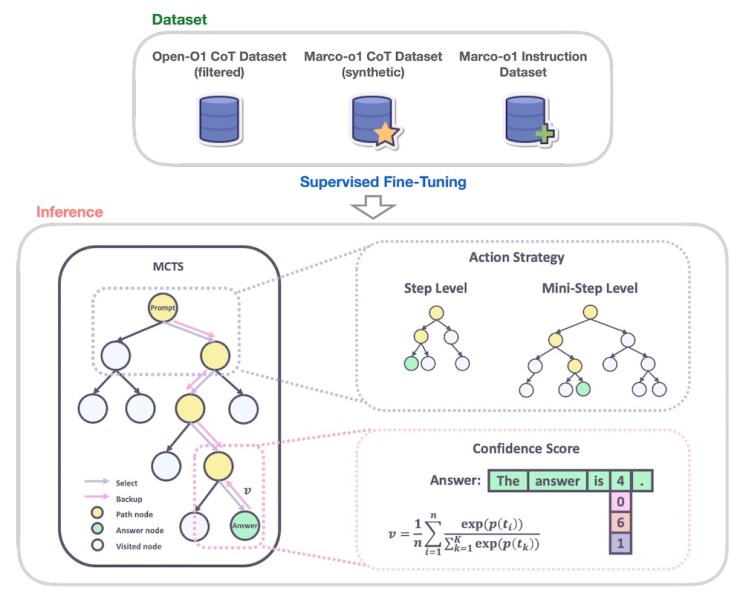

The researchers trained Marco-o1 on a combination of datasets, including the Open-O1 CoT dataset; the Marco-o1 CoT dataset, a synthetic dataset generated using MCTS; and the Marco-o1 Instruction dataset, a collection of custom instruction-following data for reasoning tasks.

MCTS is a search algorithm that has proven effective in complex problem-solving scenarios. It explores different solution paths by repeatedly sampling possibilities, simulating outcomes and gradually building a decision tree. It was a key component of AI systems that defeated top human players at the game of Go.
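To make the four phases of MCTS concrete (selection, expansion, simulation, backpropagation), here is a minimal sketch using the standard UCT selection rule on a hypothetical toy problem: choose a path of four digits, where the reward is the fraction of positions matching a hidden `TARGET`. The problem, `TARGET`, and the exploration constant `c=1.4` are all illustrative assumptions; this is generic MCTS, not Marco-o1’s code.

```python
import math
import random
from typing import Dict, List, Optional, Tuple

ACTIONS = (0, 1, 2)
DEPTH = 4
TARGET = (2, 0, 1, 2)  # hypothetical hidden "correct path" for this toy problem


def reward(state: Tuple[int, ...]) -> float:
    """Toy reward for a complete path: fraction of positions that match TARGET."""
    return sum(a == b for a, b in zip(state, TARGET)) / DEPTH


class Node:
    def __init__(self, state: Tuple[int, ...], parent: Optional["Node"] = None):
        self.state = state
        self.parent = parent
        self.children: Dict[int, "Node"] = {}
        self.visits = 0
        self.value_sum = 0.0

    def is_terminal(self) -> bool:
        return len(self.state) == DEPTH

    def untried_actions(self) -> List[int]:
        return [a for a in ACTIONS if a not in self.children]

    def uct_child(self, c: float) -> "Node":
        # UCT: mean value (exploitation) plus a bonus for rarely visited children (exploration).
        return max(
            self.children.values(),
            key=lambda ch: ch.value_sum / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts(iterations: int, c: float = 1.4, seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCT.
        while not node.is_terminal() and not node.untried_actions():
            node = node.uct_child(c)
        # 2. Expansion: add one untried child unless the node is terminal.
        if not node.is_terminal():
            action = rng.choice(node.untried_actions())
            child = Node(node.state + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: finish the path with random actions and score it.
        state = node.state
        while len(state) < DEPTH:
            state = state + (rng.choice(ACTIONS),)
        value = reward(state)
        # 4. Backpropagation: update visit counts and values back up to the root.
        while node is not None:
            node.visits += 1
            node.value_sum += value
            node = node.parent
    return root


def best_action(root: Node) -> int:
    # The most-visited child is the usual robust choice for the final decision.
    return max(root.children, key=lambda a: root.children[a].visits)


root = mcts(iterations=2000)
print(best_action(root))  # the search concentrates its visits on TARGET[0]
```

Most of the search budget ends up in the branch whose first digit is correct, because UCT keeps returning to children with higher average rewards while still occasionally sampling the others.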

Marco-o1 leverages MCTS to explore multiple reasoning paths as it generates response tokens. The model uses the confidence scores of candidate response tokens to build its decision tree and explore different branches. This enables the model to consider a wider range of possibilities and arrive at more informed and nuanced conclusions, especially in scenarios with open-ended solutions. The researchers also introduced a flexible reasoning action strategy that allows them to adjust the granularity of MCTS steps by defining the number of tokens generated at each node in the tree. This provides a tradeoff between accuracy and computational cost, giving users the flexibility to balance performance and efficiency.
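The article does not spell out how the confidence scores are computed. The sketch below assumes one plausible scheme: each generated token’s confidence is its probability renormalized over the top-k candidate tokens at that position, and a node’s value is the average confidence over its tokens. It also shows the granularity knob as a hypothetical `tokens_per_node` parameter that groups generated tokens into tree nodes. All function names and the scoring formula are illustrative assumptions, not Marco-o1’s published code.

```python
import math
from typing import List, Sequence


def token_confidence(chosen_logprob: float, top_k_logprobs: Sequence[float]) -> float:
    """Assumed scoring: softmax of the chosen token's log-prob against the top-k log-probs.

    top_k_logprobs is assumed to include the chosen token itself.
    """
    denom = sum(math.exp(lp) for lp in top_k_logprobs)
    return math.exp(chosen_logprob) / denom


def rollout_value(chosen: Sequence[float], alternatives: Sequence[Sequence[float]]) -> float:
    """Average per-token confidence, used here as the value of a reasoning path."""
    scores = [token_confidence(c, alts) for c, alts in zip(chosen, alternatives)]
    return sum(scores) / len(scores)


def split_into_nodes(tokens: Sequence[str], tokens_per_node: int) -> List[List[str]]:
    """Group generated tokens into tree nodes; a smaller tokens_per_node means a finer-grained search."""
    if tokens_per_node < 1:
        raise ValueError("tokens_per_node must be >= 1")
    return [list(tokens[i:i + tokens_per_node]) for i in range(0, len(tokens), tokens_per_node)]


# Two tokens: the first had probability 0.5 among candidates {0.5, 0.25, 0.25},
# the second had probability 0.9 among {0.9, 0.1}, so the path value is (0.5 + 0.9) / 2.
value = rollout_value(
    chosen=[math.log(0.5), math.log(0.9)],
    alternatives=[[math.log(0.5), math.log(0.25), math.log(0.25)], [math.log(0.9), math.log(0.1)]],
)
print(round(value, 6))
```

Setting `tokens_per_node=1` gives single-token granularity, the most fine-grained search and the most expensive one, since the tree branches at every generated token.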

Another key innovation in Marco-o1 is the introduction of a reflection mechanism. During the reasoning process, the model periodically prompts itself with the phrase, “Wait! Maybe I made some mistakes! I need to rethink from scratch.” This causes the model to re-evaluate its reasoning steps, identify potential errors and refine its thought process.
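A minimal sketch of the reflection step: after each reasoning pass, the reflection phrase quoted above is appended to the transcript and the model continues generating from there. `generate` is a hypothetical callable standing in for the model, and inserting the phrase between fixed passes (rather than at points the model chooses) is an assumption made for illustration.

```python
from typing import Callable

REFLECTION_PROMPT = "Wait! Maybe I made some mistakes! I need to rethink from scratch."


def reason_with_reflection(question: str, generate: Callable[[str], str], rounds: int = 1) -> str:
    """Append the reflection phrase after each pass so the model re-examines its own reasoning."""
    # First pass: the model reasons about the question directly.
    transcript = question + "\n" + generate(question)
    for _ in range(rounds):
        # Self-prompt with the reflection phrase, then let the model continue from the full transcript.
        transcript += "\n" + REFLECTION_PROMPT + "\n"
        transcript += generate(transcript)
    return transcript


# Usage with a dummy generator that always returns the same draft.
print(reason_with_reflection("Q", lambda prompt: "draft"))
```

Because each later pass sees the full transcript, including its earlier reasoning, the model can act as its own critic in the way the researchers describe.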

“This approach allows the model to act as its own critic, identifying potential errors in its reasoning,” the researchers write. “By explicitly prompting the model to question its initial conclusions, we encourage it to re-express and refine its thought process.”

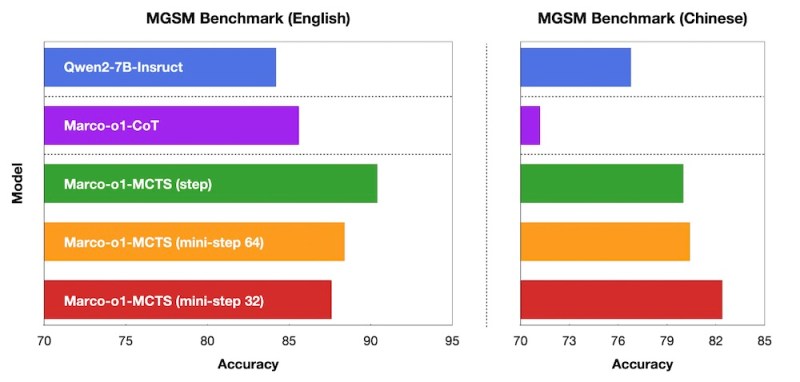

To evaluate the performance of Marco-o1, the researchers conducted experiments on several tasks, including the MGSM benchmark, a dataset of multilingual grade school math problems. Marco-o1 significantly outperformed the base Qwen2-7B model, particularly when the MCTS component was adjusted for single-token granularity.

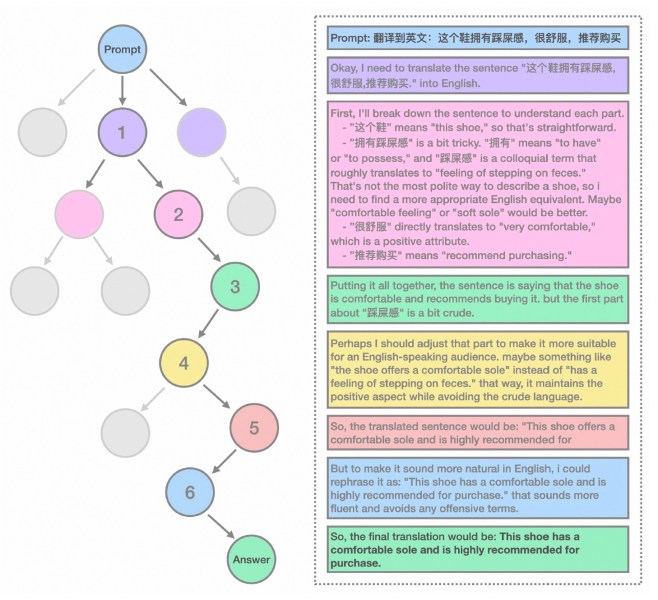

However, the primary objective of Marco-o1 was to address the challenges of reasoning in open-ended scenarios. To this end, the researchers tested the model on translating colloquial and slang expressions, a task that requires understanding subtle nuances of language, culture and context. The experiments showed that Marco-o1 captured and translated these expressions more effectively than traditional translation tools. For instance, the model correctly translated a colloquial Chinese expression that literally means “This shoe offers a stepping-on-poop sensation” into its English equivalent, “This shoe has a comfortable sole.” The model’s reasoning chain shows how it evaluates different potential meanings before arriving at the correct translation.

This paradigm could prove useful for tasks such as product design and strategy, which require deep, contextual understanding and lack well-defined benchmarks and metrics.

A new wave of reasoning models

Since the release of o1, AI labs have been racing to release reasoning models. Last week, Chinese AI lab DeepSeek released R1-Lite-Preview, its o1 competitor, which is currently available only through the company’s online chat interface. R1-Lite-Preview reportedly beats o1 on several key benchmarks.

The open-source community is also catching up with proprietary models, releasing models and datasets that take advantage of inference-time scaling laws. The Alibaba team released Marco-o1 on Hugging Face along with a partial reasoning dataset that researchers can use to train their own reasoning models. Another recently released model is LLaVA-o1, developed by researchers from multiple universities in China, which brings the inference-time reasoning paradigm to open-source vision language models (VLMs).

The release of these models comes amidst uncertainty about the future of model scaling laws. Various reports indicate that the returns on training larger models are diminishing and might be hitting a wall. But what’s for certain is that we are just beginning to explore the possibilities of inference-time scaling.
