Anthropic’s new AI tools promise to simplify prompt writing and boost accuracy by 30%

Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More

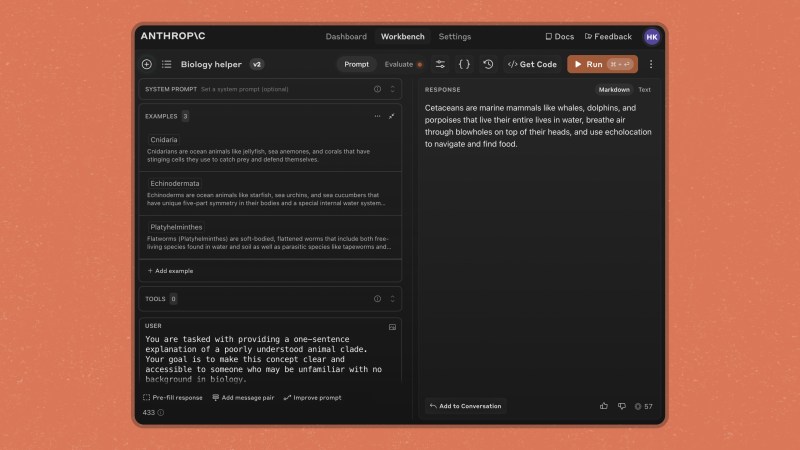

Anthropic has launched a new suite of tools designed to automate and improve prompt engineering in its developer console, a move expected to enhance the efficiency of enterprise AI development. The new features, including a “prompt improver” and advanced example management, aim to help developers create more reliable AI applications by refining the instructions—known as prompts—that guide AI models like Claude in generating responses.

At the core of these updates is the Prompt Improver, a tool that applies best practices in prompt engineering to automatically refine existing prompts. This feature is especially valuable for developers working across different AI platforms, as prompt engineering techniques can vary between models. Anthropic’s new tools aim to bridge that gap, allowing developers to adapt prompts originally designed for other AI systems to work seamlessly with Claude.

“Writing effective prompts remains one of the most challenging aspects of working with large language models,” said Hamish Kerr, product lead at Anthropic, in an exclusive interview with VentureBeat. “Our new prompt improver directly addresses this pain point by automating the implementation of advanced prompt engineering techniques, making it significantly easier for developers to achieve high-quality results with Claude.” Kerr added that the tool is particularly beneficial for developers migrating workloads from other AI providers, as it “automatically applies best practices that might otherwise require extensive manual refinement and deep expertise with different model architectures.”

Anthropic’s new tools directly respond to the growing complexity of prompt engineering, which has become a critical skill in AI development. As companies increasingly rely on AI models for tasks like customer service and data analysis, the quality of prompts plays a key role in determining how well these systems perform. Poorly written prompts can lead to inaccurate outputs, making it difficult for enterprises to trust AI in crucial workflows.

The Prompt Improver enhances prompts through multiple techniques, including chain-of-thought reasoning, which instructs Claude to tackle problems step by step before generating a response. This method can significantly boost the accuracy and reliability of outputs, particularly for complex tasks. The tool also standardizes examples in prompts, rewrites ambiguous sections, and adds prefilled instructions to better guide Claude’s responses.

“Our testing shows significant improvements in accuracy and consistency,” Kerr said, noting that the prompt improver increased accuracy by 30% in a multilabel classification test and achieved 100% adherence to word count in a summarization task.

AI training made simple: Inside Anthropic’s new example management system

Anthropic’s new release also includes an example management feature, which allows developers to manage and edit examples directly in the Anthropic Console. This feature is particularly useful for ensuring Claude follows specific output formats, a necessity for many business applications that require consistent and structured responses. If a prompt lacks examples, developers can use Claude to generate synthetic examples automatically, further simplifying the development process.

“Humans and Claude alike learn very well from examples,” Kerr explained. “Many developers use multi-shot examples to demonstrate ideal behavior to Claude. The prompt improver will use the new chain-of-thought section to take your ideal inputs/outputs and ‘fill in the blanks’ between the input and output with high-quality reasoning to show the model how it all fits together.”

Anthropic’s release of these tools comes at a pivotal time for enterprise AI adoption. As businesses increasingly integrate AI into their operations, they face the challenge of fine-tuning models to meet their specific needs. Anthropic’s new tools aim to ease this process, enabling enterprises to deploy AI solutions that work reliably and efficiently right out of the box.

Anthropic’s focus on feedback and iteration allows developers to refine prompts and request changes, such as shifting output formats from JSON to XML, without the need for extensive manual intervention. This flexibility could be a key differentiator in the competitive AI landscape, where companies like OpenAI and Google are also vying for dominance.

Kerr pointed to the tool’s impact on enterprise-level workflows, particularly for companies like Kapa.ai, which used the prompt improver to migrate critical AI workflows to Claude. “Anthropic’s prompt improver streamlined our migration to Claude 3.5 Sonnet and enabled us to get to production faster,” said Finn Bauer, co-founder of Kapa.ai, in a statement.

Beyond better prompts: Anthropic’s master plan for enterprise AI dominance

Beyond improving prompts, Anthropic’s latest tools signal a broader ambition: securing a leading role in the future of enterprise AI. The company has built its reputation on responsible AI, championing safety and reliability—two pillars that align with the needs of businesses navigating the complexities of AI adoption. By lowering the barriers to effective prompt engineering, Anthropic is helping enterprises integrate AI into their most critical operations with fewer headaches.

“We’re delivering quantifiable improvements—like a 30% boost in accuracy—while giving technical teams the flexibility to adapt and refine as needed,” said Kerr.

As competition in the enterprise AI space grows, Anthropic’s approach stands out for its practical focus. Its new tools don’t just help businesses adopt AI—they aim to make AI work better, faster, and more reliably. In a crowded market, that could be the edge enterprises are looking for.

Source link