ByteDance’s AI can make your photos act out movie scenes — but is it too real?

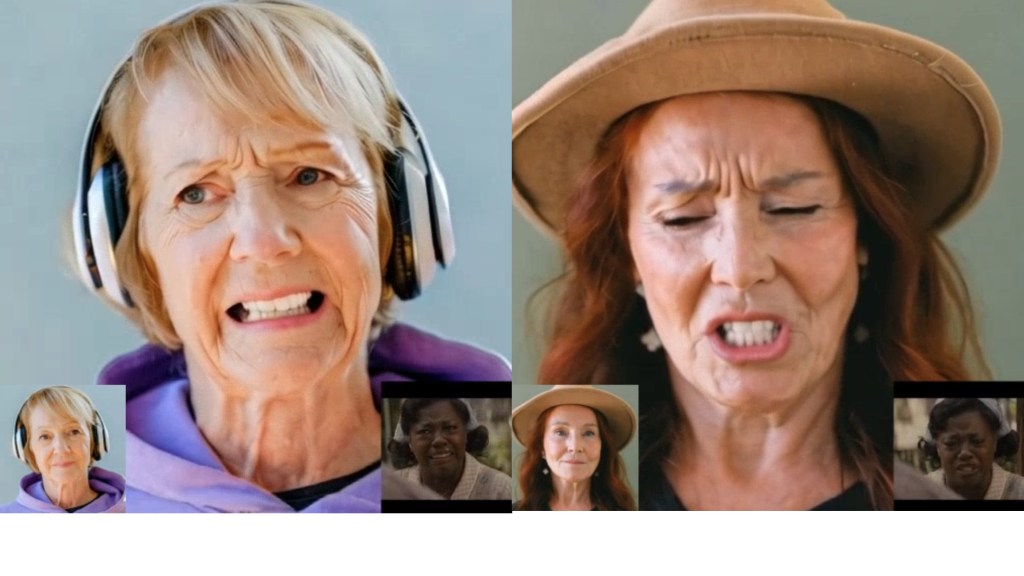

ByteDance has unveiled an artificial intelligence system that can transform any photograph into a convincing video performance, complete with subtle expressions and emotional depth that rival real footage. The Chinese technology giant, known for TikTok, designed its “X-Portrait 2” system to make still images mirror scenes from famous movies — with results so realistic they blur the line between authentic and artificial content.

The system’s demonstrations showcase still photos performing iconic scenes from films like “The Shining,” “Face/Off,” and “Fences,” capturing every nuanced expression from the original performances. A single photograph can now display fear, rage, or joy with the same convincing detail as a trained actor, while maintaining the original person’s identity and characteristics.

This breakthrough arrives at a crucial moment. As society grapples with digital misinformation and the aftermath of the U.S. presidential election, X-Portrait 2’s ability to turn any photograph into video that is hard to distinguish from real footage raises serious concerns. Previous AI animation tools produced obviously artificial results with mechanical movements. But ByteDance’s new system captures the natural flow of facial muscles, subtle eye movements, and complex expressions that make human faces uniquely expressive.

ByteDance achieved this realism through an innovative approach. Instead of tracking specific points on a face — the standard method used by most animation software — the system observes and learns from complete facial movements. Where older systems created expressions by connecting dots, X-Portrait 2 captures the fluid motion of an entire face, even during rapid speech or when viewed from different angles.

TikTok’s billion-user database: The secret behind ByteDance’s AI breakthrough

ByteDance’s advantage stems from its unique position as owner of TikTok, which processes over a billion user-generated videos daily. This massive collection of facial expressions, movements, and emotions provides training data at a scale unavailable to most AI companies. While competitors rely on limited datasets or synthetic data, ByteDance can fine-tune its AI models using real-world expressions captured across diverse faces, lighting conditions, and camera angles.

The release of X-Portrait 2 coincides with ByteDance’s expansion of AI research beyond China. The company is establishing new research centers in Europe, with potential locations in Switzerland, the UK, and France. A planned $2.13 billion AI center in Malaysia and collaboration with Tsinghua University suggest a strategy to build AI expertise across multiple continents.

This global research push comes at a critical moment. While ByteDance faces regulatory scrutiny in Western markets, including Canada’s recent order that TikTok wind down its Canadian business operations and ongoing U.S. debates about restrictions, the company continues to advance its technical capabilities.

Hollywood’s next revolution: How AI could replace million-dollar motion capture

The implications for the animation industry extend beyond technical achievements. Major studios currently spend millions on motion capture equipment and employ hundreds of animators to create realistic facial expressions. X-Portrait 2 suggests a future where a single photograph and a reference video could replace much of this infrastructure.

This shift arrives amid growing debate about AI-generated content and digital rights. While competitors have rushed to release their code publicly, ByteDance has kept X-Portrait 2’s implementation private — a decision that reflects increasing awareness of how AI tools can be misused to create unauthorized performances or misleading content.

ByteDance’s focus on human movement and expression marks a distinct path from other AI companies. While firms like OpenAI and Anthropic concentrate on language processing, ByteDance builds on its core strength: understanding how people move and express themselves on camera. This specialization emerges directly from TikTok’s years of analyzing dance trends and facial expressions.

This emphasis on human motion could prove more significant than current market analysis suggests. As work and socializing increasingly move into virtual spaces, technology that accurately captures and transfers human emotion becomes crucial. ByteDance’s advances position it to influence how people will interact in digital environments, from business meetings to entertainment.

AI security concerns: When digital faces need digital locks

The October dismissal of a ByteDance intern for allegedly interfering with AI model training highlighted an often-overlooked aspect of AI development: internal security. As models become more sophisticated, protecting them from tampering grows increasingly critical.

The technology arrives as demand for AI-generated video content rises across entertainment, education, and business communication. While X-Portrait 2 demonstrates significant technical progress in maintaining consistent identity while transferring nuanced expressions, it also raises questions about authentication and verification of AI-generated content.

As Western governments scrutinize Chinese technology companies, ByteDance’s advances in AI animation present a complex reality: innovation knows no borders, and the future of how we interact online may be shaped by technologies developed far from Silicon Valley.