Alchemist’s latest batch puts AI to work as accelerator expands to Tokyo, Doha

Alchemist Accelerator has a new pile of AI-forward companies demoing their wares today, if you care to watch, and the program itself is making some international moves into Tokyo and Doha. Read on for our picks of the batch.

When I chatted with Alchemist CEO and founder Ravi Belani about this cohort ahead of demo day (today at 10:30 a.m. Pacific), it was clear that ambitions for AI startups have contracted, and that’s not a bad thing.

No early-stage startup today is at all likely to become the next OpenAI or Anthropic; those companies’ lead in foundation large language models is simply too large right now.

“The cost of building a basic LLM is prohibitively high; you get into the hundreds of millions of dollars just to get it out. The question is, as a startup, how do you compete?” Belani said. “VCs don’t want wrappers around LLMs. We’re looking for companies where there’s a vertical play, where they own the end user and there’s a network effect and lock-in over time.”

That matched my read: the companies selected for this cohort all use AI to solve a specific problem in a specific domain.

Healthcare is a good example. AI models that assist with diagnosis, care planning and so on are being tested more and more, but still cautiously. The specter of liability and bias hangs over this heavily regulated industry, but it is also full of legacy processes that could be replaced with real, tangible benefit.

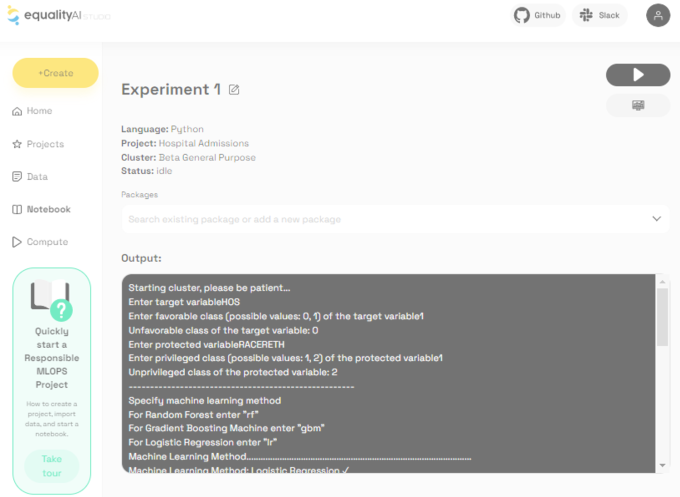

Equality AI isn’t trying to revolutionize cancer care or anything. Its goal is to ensure that the models being put to work don’t fall afoul of important non-discrimination protections in AI regulation. This is a serious risk: If your care or diagnosis model were found to exhibit bias against a protected class (for instance, assigning a higher risk to a Muslim or a queer person), that could sink the product and open you up to lawsuits.

Do you want to trust the model maker or vendor? Or do you want a disinterested (in its original sense, of having no conflicting interest) specialist who knows the ins and outs of the policies, and also how to evaluate a model properly?

“We all deserve the right to trust that the AI behind the medical curtain is safe and effective,” CEO and founder Maia Hightower told TechCrunch. “Healthcare leaders are struggling to keep up with the complex regulatory environment and rapidly changing AI technology. In the next couple of years, AI compliance and litigation risk will continue to grow, driving the widespread adoption of responsible AI practices in healthcare. The risk of non-compliance and penalties as stiff as loss of certification makes our solution very timely.”

It’s a similar story for Cerevox, which is working on eliminating hallucinations and other errors from today’s LLMs. But not in a general sense: The company works with clients to structure their data and pipelines so that these bad habits of AI models can be minimized and observed. It’s not about keeping ChatGPT from making up a physicist when you ask it about a nonexistent discovery in the 1800s; it’s about preventing a risk evaluation engine from extrapolating from data in a column that should exist but doesn’t.

Cerevox is working with fintech and insurtech companies first, which Belani acknowledged is “an unsexy use case, but it’s a path to build out a product.” A path with paying customers, which is, you know, how you start a business.

Quickr Bio is building on the new world of biotech made possible by CRISPR-Cas9 gene editing, which brings new risks as well as new opportunities. How do you verify that the edits you’re making are the right ones? Being 99% sure isn’t enough (again, regulations and liability), but testing to raise your confidence can be time-consuming and expensive. Quickr claims its method of quantifying and understanding the modifications actually made (as opposed to the intended ones; ideally the two are identical) is up to 100 times faster than existing methods.

In other words, Quickr isn’t creating a new paradigm, just aiming to be the best tool for working within the existing one. If it can deliver even a significant fraction of its claimed speedup, it could become a must-have in many labs.

You can check out the rest of the cohort here; you’ll see that the companies above are representative of the vibe. Demos commence at 10:30 a.m. Pacific.

As for the accelerator itself, it’s getting some serious buy-in for new programs in Tokyo and Doha.

“We think it’s an inflection point in Japan, it’s going to be an exciting place to source stories from and for companies to come to,” Belani said. A recent change to tax policy should free up early-stage capital for startups, and investment slipping out of China is landing in Japan, particularly Tokyo, where he expects a new (or rather refurbished) tech center to emerge. The fact that OpenAI is building out a satellite office there is, he suggested, all you need to know.

Mitsubishi is investing through some arm or another, and the Japan External Trade Organization is involved as well. I’ll certainly be interested to see what the awakened Japanese startup economy produces.

Alchemist Doha is getting a $13 million commitment from the government, with an interesting twist.

“The mandate there is focusing on emerging market founders, the 90% of the world orphaned by where a lot of tech innovation is occurring,” Belani said. “We have found that some of the best companies in the U.S. are not from the U.S. There’s something about having an outside perspective that creates amazing companies. There’s also a lot of instability out there and this talent needs a home.”

He noted that they’ll be making bigger investments, from $200,000 to $1 million, out of this program, which may change the type of companies that take part.