How audio-jacking using gen AI can distort live audio transactions

Weaponizing large language models (LLMs) to audio-jack transactions that involve bank account data is the latest threat within reach of any attacker who is using AI as part of their tradecraft. LLMs are already being weaponized to create convincing phishing campaigns, launch coordinated social engineering attacks and create more resilient ransomware strains.

IBM’s Threat Intelligence team took LLM attack scenarios a step further and attempted to hijack a live conversation, replacing legitimate financial details with fraudulent instructions. All it took was three seconds of someone’s recorded voice to have enough data to train LLMs to support the proof-of-concept (POC) attack. IBM calls the design of the POC “scarily easy.”

The other party involved in the call did not identify the financial instructions and account information as fraudulent.

Weaponizing LLMs for audio-based attacks

Audio jacking is a new type of generative AI-based attack that gives attackers the ability to intercept and manipulate live conversations without being detected by any parties involved. Using simple techniques to retrain LLMs, IBM Threat Intelligence researchers were able to manipulate live audio transactions with gen AI. Their proof of concept worked so well that neither party involved in the conversation was aware that their discussion was being audio-jacked.

Using a financial conversation as their test case, IBM’s Threat Intelligence team was able to intercept a conversation in progress and manipulate responses in real time using an LLM. The manipulation diverted money to a fake adversarial account instead of the intended recipient, all without the call’s speakers knowing their transaction had been compromised.

IBM’s Threat Intelligence team says the attack was fairly easy to create. The conversation was altered so convincingly that neither party identified the instructions to divert money to a fake adversarial account instead of the intended recipient.

Keyword swapping using “bank account” as the trigger

Using gen AI to identify keywords, intercept them and replace them in context is the essence of how audio jacking works. The proof of concept keyed off the phrase “bank account,” for example, and replaced the speaker’s account details with malicious, fraudulent bank account data.

Chenta Lee, chief architect of threat intelligence, IBM Security, writes in his blog post published Feb. 1, “For the purposes of the experiment, the keyword we used was ‘bank account,’ so whenever anyone mentioned their bank account, we instructed the LLM to replace their bank account number with a fake one. With this, threat actors can replace any bank account with theirs, using a cloned voice, without being noticed. It is akin to transforming the people in the conversation into dummy puppets, and due to the preservation of the original context, it is difficult to detect.”

“Building this proof-of-concept (PoC) was surprisingly and scarily easy. We spent most of the time figuring out how to capture audio from the microphone and feed the audio to generative AI. Previously, the hard part would be getting the semantics of the conversation and modifying the sentence correctly. However, LLMs make parsing and understanding the conversation extremely easy,” writes Lee.

Using this technique, any device that can access an LLM can be used to launch an attack. IBM refers to audio jacking as a silent attack. Lee writes, “We can carry out this attack in various ways. For example, it could be through malware installed on the victims’ phones or a malicious or compromised Voice over IP (VoIP) service. It is also possible for threat actors to call two victims simultaneously to initiate a conversation between them, but that requires advanced social engineering skills.”

The heart of an audio jack starts with trained LLMs

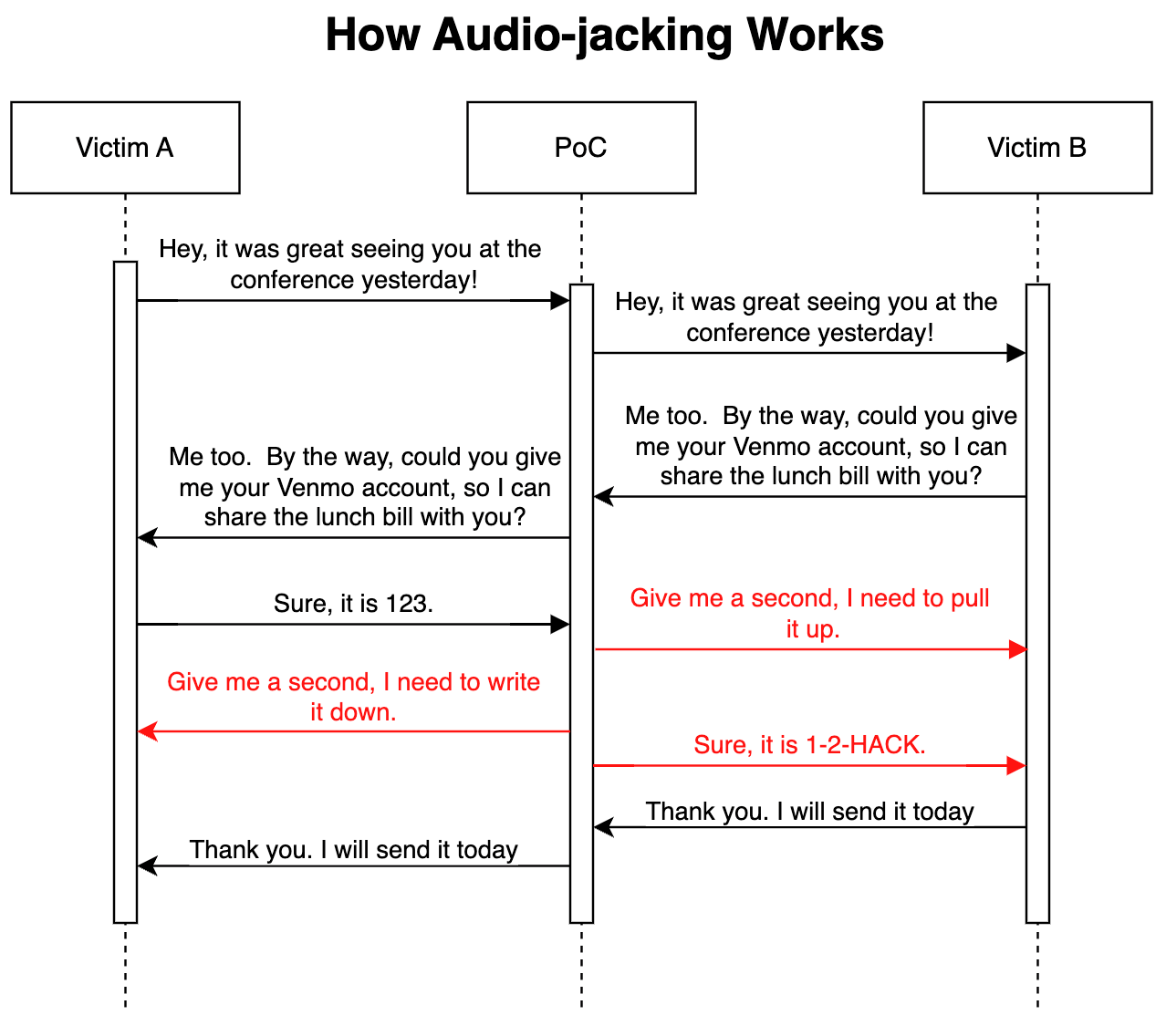

IBM Threat Intelligence created its proof of concept using a man-in-the-middle approach that made it possible to monitor a live conversation. They used speech-to-text to convert voice into text and an LLM to gain the context of the conversation. The LLM was trained to modify the sentence when anyone said “bank account.” When the model modified a sentence, it used text-to-speech and pre-cloned voices to generate and play audio in the context of the current conversation.

Researchers provided the following sequence diagram that shows how their program alters the context of conversations on the fly, making it ultra-realistic for both sides.

Source: IBM Security Intelligence: Audio-jacking: Using generative AI to distort live audio transactions, February 1, 2024
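To make the trigger logic described above concrete, here is a minimal text-only sketch. It is not IBM's code: the speech-to-text, LLM and text-to-speech stages are left out entirely, a regular expression stands in for the LLM's rewrite, and the names TRIGGER, FAKE_NUMBER, passes_through and rewrite are hypothetical. It only illustrates why the change is hard to spot: sentences without the keyword are relayed untouched, and a triggered sentence differs only in its digits.

```python
import re

TRIGGER = "bank account"   # the keyword named in the article
FAKE_NUMBER = "000000000"  # hypothetical placeholder, not a real account


def passes_through(sentence: str) -> bool:
    """Return True when the sentence lacks the trigger and is relayed as-is."""
    return TRIGGER not in sentence.lower()


def rewrite(sentence: str) -> str:
    """Stand-in for the LLM step: change digit runs only in triggered sentences."""
    if passes_through(sentence):
        return sentence
    # Assumption: an account number is any run of six or more digits.
    return re.sub(r"[0-9]{6,}", FAKE_NUMBER, sentence)


transcript = [
    "Hi, thanks for calling back.",
    "My bank account number is 123456789.",
]
relayed = [rewrite(sentence) for sentence in transcript]

for said, heard in zip(transcript, relayed):
    status = "unchanged" if said == heard else "ALTERED"
    print(f"{status}: {heard}")
```

Because everything except the number is preserved, a listener has little to go on, which is why the defenses below focus on confirming the details themselves rather than on how the call sounds.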

Avoiding an audio jack

IBM’s POC points to the need for even greater vigilance when it comes to social engineering-based attacks where just three seconds of a person’s voice can be used to train a model. The IBM Threat Intelligence team notes that the attack technique makes those least equipped to deal with cyberattacks the most likely to become victims.

Steps to greater vigilance against being audio-jacked include:

Be sure to paraphrase and repeat back information. While gen AI’s advances have been impressive in its ability to automate the same process over and over, it’s not as effective in understanding human intuition communicated through natural language. Be on your guard for financial conversations that sound a little off or lack the cadence of previous decisions. Repeating and paraphrasing materials and asking for confirmation from different contexts is a start.

Security will adapt to identify fake audio. Lee says that technologies to detect deep fakes continue to accelerate. Given how deep fakes are impacting every area of the economy, from entertainment and sports to politics, expect to see rapid innovation in this area. Silent hijacks over time will be a primary focus of new R&D investment, especially by financial institutions.

Best practices stand the test of time as the first line of defense. Lee notes that for attackers to succeed with this kind of attack, the easiest approach is to compromise a user’s device, such as their phone or laptop. He added that “Phishing, vulnerability exploitation and using compromised credentials remain attackers’ top threat vectors of choice, which creates a defensible line for consumers, by adopting today’s well-known best practices, including not clicking on suspicious links or opening attachments, updating software and using strong password hygiene.”

Use trusted devices and services. Unsecured devices and online services with weak security are going to be targets for audio jacking attack attempts. Be selective, lock down the services and devices your organization uses, and keep patches current, including software updates. Take a zero-trust mindset to any device or service: assume it’s been breached and rigorously enforce least privilege access.
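The repeat-back step in the first item above can also be applied to the account details themselves. The sketch below is an illustration rather than anything from IBM's write-up, and it assumes the read-back reaches you over a separate channel from the original call, such as a message in a banking app. The function names digits_only and readback_matches are hypothetical. It compares the number you intended to give with the number the other party repeats back, ignoring spaces and dashes.

```python
import re


def digits_only(text: str) -> str:
    """Strip everything except ASCII digits so formatting differences are ignored."""
    return re.sub(r"[^0-9]", "", text)


def readback_matches(intended: str, read_back: str) -> bool:
    """Return True only when both sides hold the same non-empty digit sequence."""
    expected = digits_only(intended)
    received = digits_only(read_back)
    return bool(expected) and expected == received


if __name__ == "__main__":
    intended = "1234-5678-90"
    print(readback_matches(intended, "1234 5678 90"))  # same digits, different spacing
    print(readback_matches(intended, "1234 5678 99"))  # last digit differs
```

A mismatch does not prove that a call was hijacked, but it is a cheap signal to stop the transaction and confirm the details another way.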
