New transformer architecture can make language models faster and more resource-efficient

Large language models like ChatGPT and Llama-2 are notorious for their extensive memory and computational demands, making them costly to run. Trimming even a small fraction of their size can lead to significant cost reductions.

To address this issue, researchers at ETH Zurich have unveiled a revised version of the transformer, the deep learning architecture underlying language models. The new design reduces the size of the transformer considerably while preserving accuracy and increasing inference speed, making it a promising architecture for more efficient language models.

Transformer blocks

Language models operate on a foundation of transformer blocks, uniform units adept at parsing sequential data, such as text passages.

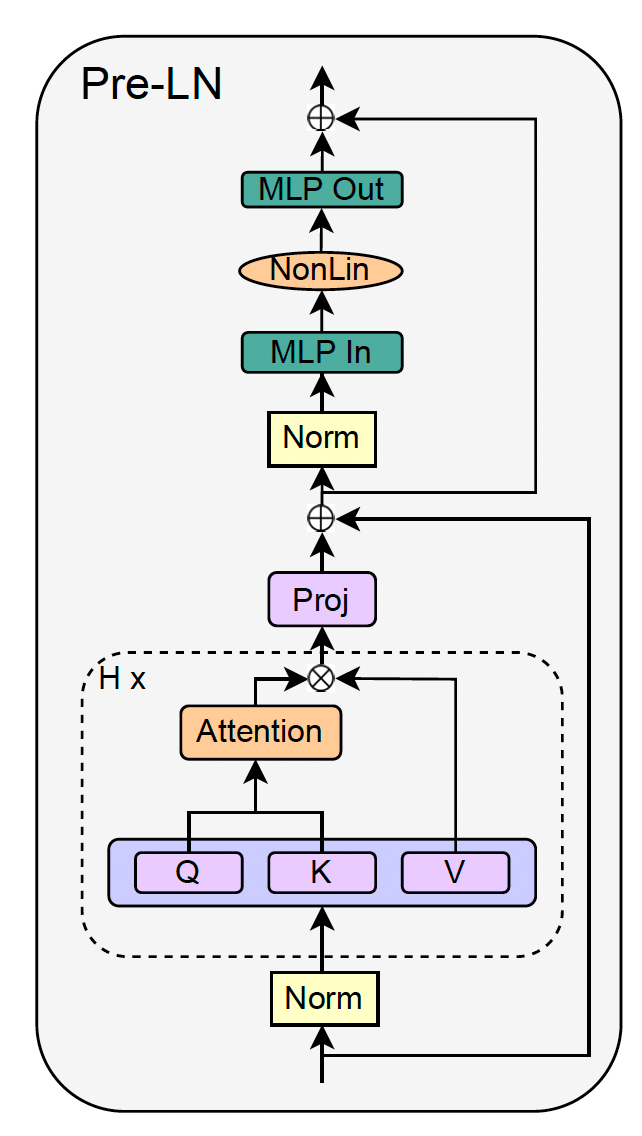

Within each block, there are two key sub-blocks: the “attention mechanism” and the multi-layer perceptron (MLP). The attention mechanism acts like a highlighter, selectively focusing on different parts of the input (like words in a sentence) to capture their context and importance relative to each other. This helps the model determine how the words in a sentence relate, even if they are far apart.

After the attention mechanism has done its work, the MLP, a mini neural network, further refines and processes the highlighted information, helping to distill the data into a more sophisticated representation that captures complex relationships.

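Beyond these core components, transformer blocks are equipped with additional features such as “residual connections” and “normalization layers.” Residual connections give gradients a short path through the network, and normalization layers keep activations in a stable range. Together, they accelerate learning and mitigate issues common in deep neural networks, such as vanishing gradients.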
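
To make these pieces concrete, here is a minimal sketch of one conventional transformer block in plain Python. It is an illustration under simplifying assumptions, not the architecture of any particular model: it uses a single attention head, no causal mask, a ReLU MLP, normalization without learnable scale or bias, and hypothetical parameter names (`wq`, `wk`, `wv`, `wo`, `w1`, `w2`).

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices (the residual connection)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def attention(x, wq, wk, wv, wo):
    """Single-head attention; returns the output and the attention weights."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d = len(q[0])
    scores = matmul(q, [list(col) for col in zip(*k)])  # q @ k^T
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(matmul(weights, v), wo), weights

def mlp(x, w1, w2):
    """Two-layer perceptron with a ReLU in between."""
    hidden = [[max(0.0, v) for v in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def transformer_block(x, p):
    """Attention sub-block, then MLP sub-block, each wrapped in norm + residual."""
    attn_out, _ = attention(layer_norm(x), p["wq"], p["wk"], p["wv"], p["wo"])
    x = add(x, attn_out)
    return add(x, mlp(layer_norm(x), p["w1"], p["w2"]))

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

# Toy sizes, chosen only for illustration: 3 tokens, width 4, MLP hidden size 16.
rng = random.Random(0)
d_model, d_hidden = 4, 16
params = {name: random_matrix(d_model, d_model, rng) for name in ("wq", "wk", "wv", "wo")}
params["w1"] = random_matrix(d_model, d_hidden, rng)
params["w2"] = random_matrix(d_hidden, d_model, rng)
x = random_matrix(3, d_model, rng)
out = transformer_block(x, params)
print(len(out), "tokens of width", len(out[0]))
```

The block maps a sequence of token vectors to a sequence of the same shape, which is what lets identical blocks be stacked.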

As transformer blocks stack to constitute a language model, their capacity to discern complex relationships in training data grows, enabling the sophisticated tasks performed by contemporary language models. Despite the transformative impact of these models, the fundamental design of the transformer block has remained largely unchanged since its creation.

Making the transformer more efficient

“Given the exorbitant cost of training and deploying large transformer models nowadays, any efficiency gains in the training and inference pipelines for the transformer architecture represent significant potential savings,” write the ETH Zurich researchers. “Simplifying the transformer block by removing non-essential components both reduces the parameter count and increases throughput in our models.”

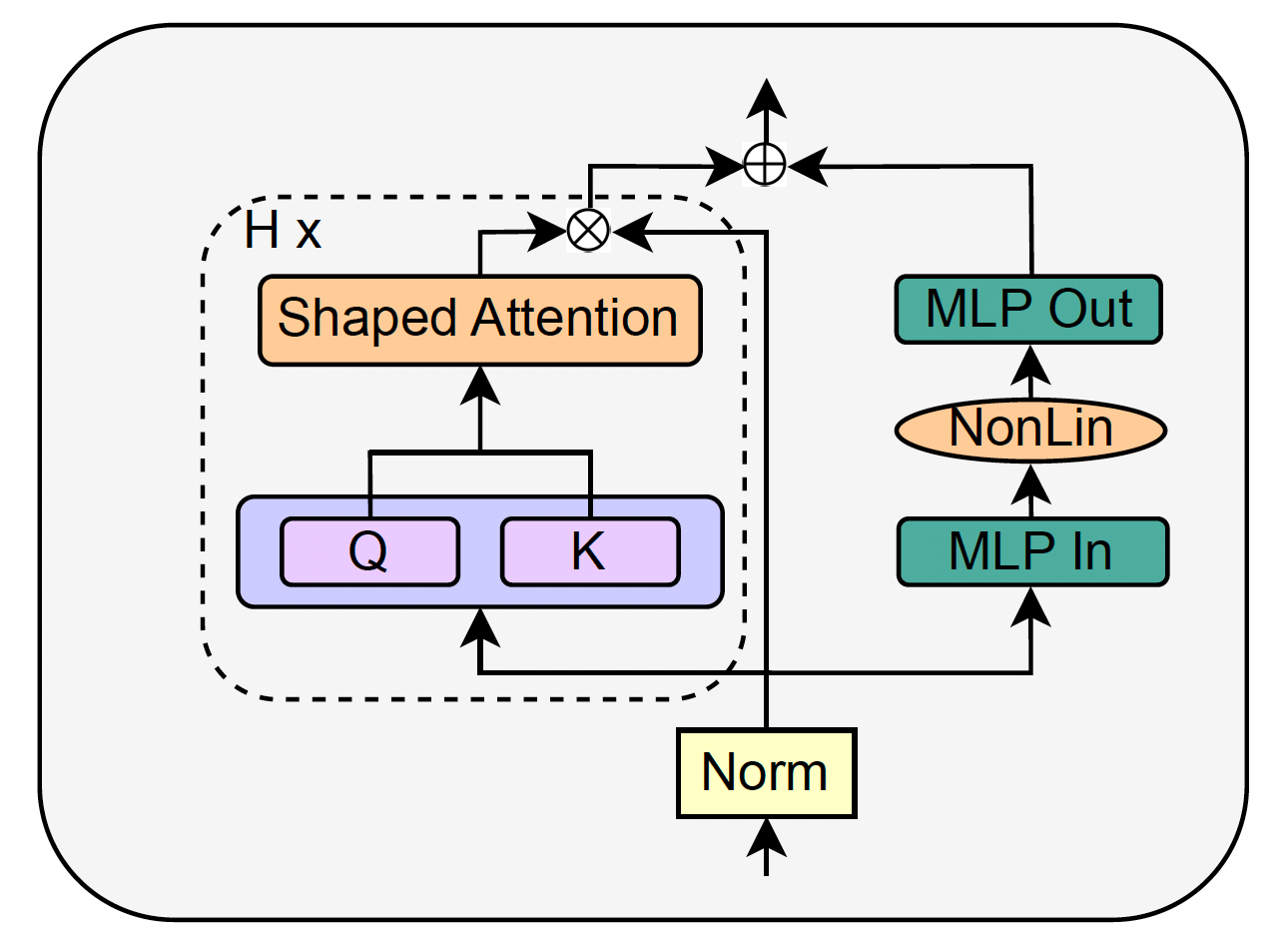

The team’s experiments demonstrate that paring down the transformer block does not compromise training speed or performance on downstream tasks. Standard transformer models feature multiple attention heads, each with its own set of query (Q), key (K), and value (V) parameters, which together determine how strongly each input token attends to the others and what information is passed along. The researchers discovered that they could eliminate the V parameters and the subsequent projection layer, which combines the heads’ outputs before the MLP block, without losing efficacy.
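
A back-of-the-envelope count shows how much of a block these two components account for. The sketch below is an estimate under stated assumptions, not the researchers' own accounting: square `d_model × d_model` matrices for Q, K, V, and the output projection, an MLP with a 4x hidden expansion, and no biases, normalization, or embedding parameters. The function names and the width of 768 are hypothetical.

```python
def attention_params(d_model, keep_value_and_projection=True):
    """Weight count of the attention sub-block (biases ignored)."""
    # Q and K are always present; V and the output projection are optional here.
    matrices = 4 if keep_value_and_projection else 2
    return matrices * d_model * d_model

def mlp_params(d_model, expansion=4):
    """Weight count of a two-layer MLP with the given hidden expansion."""
    return 2 * d_model * (expansion * d_model)

def block_params(d_model, keep_value_and_projection=True):
    return attention_params(d_model, keep_value_and_projection) + mlp_params(d_model)

d_model = 768  # hypothetical width, chosen only for illustration
standard = block_params(d_model)
simplified = block_params(d_model, keep_value_and_projection=False)
reduction = 1 - simplified / standard
print(f"standard: {standard:,}  simplified: {simplified:,}  reduction: {reduction:.1%}")
```

Under these assumptions a block shrinks from 12 to 10 weight matrices' worth of parameters, a reduction of one sixth regardless of width.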

Moreover, they removed the skip connections, which traditionally help avert the “vanishing gradients” issue in deep learning models. Vanishing gradients make training deep networks difficult, as the gradient becomes too small to effect significant learning in the earlier layers.
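
A toy calculation illustrates why skip connections have traditionally been used. The sketch assumes a chain of scalar linear layers with an identical, hypothetical slope. Real networks use matrices and nonlinearities, so this shows only the trend, and it says nothing about how the researchers compensate for removing the connections.

```python
def input_gradient(depth, layer_slope, skip):
    """Gradient of the output with respect to the input for a chain of scalar layers.

    Without skip: each layer computes x -> layer_slope * x.
    With skip:    each layer computes x -> x + layer_slope * x.
    """
    per_layer = (1.0 + layer_slope) if skip else layer_slope
    return per_layer ** depth

depth, slope = 24, 0.1  # hypothetical values for illustration
print("without skip:", input_gradient(depth, slope, skip=False))
print("with skip:   ", input_gradient(depth, slope, skip=True))
```

Without the skip path, the gradient is a product of small factors and shrinks exponentially with depth. With it, every layer contributes an identity term that keeps the product from collapsing toward zero.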

They also redesigned the transformer block to process attention heads and the MLP concurrently rather than sequentially. This parallel processing marks a departure from the conventional architecture.
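
The difference in data flow can be sketched with stand-in sub-blocks. The code below is a schematic, not the researchers' implementation: `toy_attn`, `toy_mlp`, and `identity_norm` are hypothetical placeholders operating on a single vector, and the `skip` flag is included only to show the layout with and without a residual path.

```python
def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]

def sequential_block(x, attn, mlp, norm):
    """Conventional layout: the MLP sees the output of the attention step."""
    y = add(x, attn(norm(x)))
    return add(y, mlp(norm(y)))

def parallel_block(x, attn, mlp, norm, skip=True):
    """Parallel layout: attention and MLP both read the same normalized input."""
    n = norm(x)
    branches = add(attn(n), mlp(n))
    return add(x, branches) if skip else branches

# Placeholder sub-blocks, chosen so the arithmetic is easy to follow.
def toy_attn(v):
    mean = sum(v) / len(v)  # mixes information across positions
    return [mean] * len(v)

def toy_mlp(v):
    return [max(0.0, t) for t in v]  # acts on each position independently

def identity_norm(v):
    return list(v)

x = [1.0, -1.0, 3.0]
seq_out = sequential_block(x, toy_attn, toy_mlp, identity_norm)
par_out = parallel_block(x, toy_attn, toy_mlp, identity_norm)
par_noskip = parallel_block(x, toy_attn, toy_mlp, identity_norm, skip=False)
print(seq_out, par_out, par_noskip)
```

In the parallel layout neither branch waits for the other's result, so the two computations can be scheduled side by side.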

To compensate for the removed components, the researchers adjusted other non-learnable parameters, refined the training methodology, and implemented architectural tweaks. Together, these changes preserve the model’s ability to learn despite the leaner structure.

Testing the new transformer block

The ETH Zurich team evaluated their compact transformer block across language models of varying depths. They shrank the conventional transformer’s size by approximately 16% without sacrificing accuracy, and the smaller models also ran inference faster. To put that in perspective, applying this new architecture to a large model like GPT-3, with its 175 billion parameters, could result in a memory saving of about 50 GB.
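
The GPT-3 figure can be sanity-checked with simple arithmetic. The sketch below assumes weights stored at 2 bytes per parameter (16-bit precision) and decimal gigabytes. Both are assumptions for illustration, and the function name is hypothetical.

```python
def memory_saved_gb(total_params, fraction_removed, bytes_per_param=2):
    """Memory freed by removing a fraction of a model's parameters, in GB (1e9 bytes)."""
    removed_params = total_params * fraction_removed
    return removed_params * bytes_per_param / 1e9

saved = memory_saved_gb(175e9, 0.16)
print(f"about {saved:.0f} GB saved at 2 bytes per parameter")
```

Under these assumptions the saving comes to roughly 56 GB, the same order of magnitude as the figure quoted above. At 4 bytes per parameter it would be twice that.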

“Our simplified models are able to not only train faster but also to utilize the extra capacity that more depth provides,” the researchers write. While their technique has proven effective at smaller scales, it has not yet been tested on larger models. Further enhancements, such as tailoring AI processors to this streamlined architecture, could amplify its impact.

“We believe our work can lead to simpler architectures being used in practice, thereby helping to bridge the gap between theory and practice in deep learning, and reducing the cost of large transformer models,” the researchers write.
