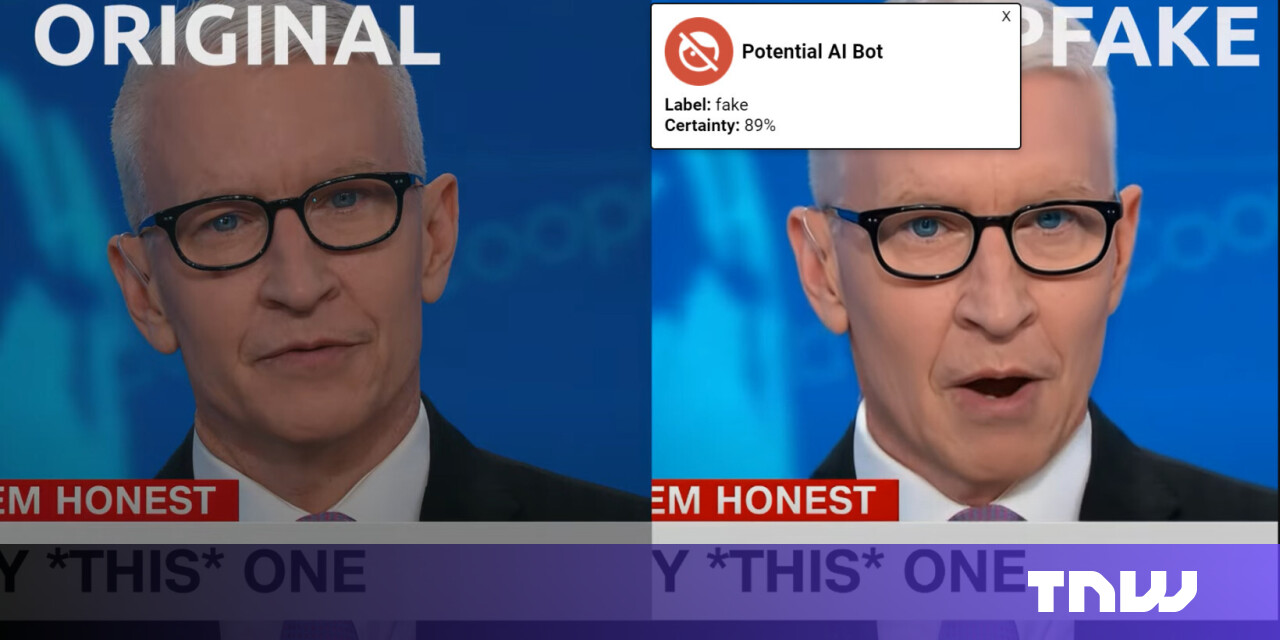

‘We may irreversibly lose control of autonomous AI,’ warn top academics

Open letters warning of AI’s risks are piling up, with top technologists and researchers sounding the alarm on uncontrollable development, pressing existential threats, and the lack of regulation.

Now, just a week before the AI Summit in London, a new letter calls on companies and governments to ensure the safe and ethical use of AI. The signatories include a number of European academics, three Turing Award winners, and even so-called AI godfathers Yoshua Bengio and Geoffrey Hinton.

Not coincidentally, last May, Hinton quit Google to freely speak about the looming dangers of artificial intelligence. To add to the dystopian atmosphere, a month earlier, Elon Musk warned that AI could lead to “civilisation destruction” and Google’s Sundar Pichai admitted the dangers “keep [him] up at night.”

In the letter, published on Tuesday, the signatories highlighted that while the technology’s capabilities can have an immensely positive impact on humanity, the lack of investment in safety and mitigating harms could have the exact opposite effect.

They noted that AI has already surpassed human abilities in certain domains, warning that “unforeseen abilities and behaviours” may emerge without explicit programming.

“Without sufficient caution, we may irreversibly lose control of autonomous AI systems, rendering human intervention ineffective,” reads the letter. This could lead to a series of worrisome and escalating dangers, ranging from cybercrime and social manipulation to large-scale loss of the biosphere and… extinction.

Given the stakes, the signatories are calling on companies to allocate at least one-third of their R&D budget to AI safety and ethics. They’re also urging governments to enforce standards and regulations and facilitate international cooperation in order to prevent recklessness and misuse.

The EU’s upcoming AI Act will be the world’s first AI-specific regulation, seeking to establish a clear set of rules on the technology’s development. But despite the warnings, the business sector has voiced different concerns, fearing that regulation will stifle innovation.

Indeed, walking the fine line between AI regulation and progress seems to be one of the biggest challenges governments are facing today. But the difficulty presented doesn’t (and shouldn’t) mean that safety and governance are of secondary importance.

“To steer AI toward positive outcomes and away from catastrophe, we need to reorient. There is a responsible path, if we have the wisdom to take it,” conclude the academics.