Patronus AI conjures up an LLM evaluation tool for regulated industries

It turns out that when you put together two AI experts, both of whom formerly worked at Meta researching responsible AI, magic happens. The founders of Patronus AI came together last March to build a solution to evaluate and test large language models with an eye towards regulated industries where there is little tolerance for errors.

Rebecca Qian, the company’s CTO, led responsible NLP research at Meta AI, while her co-founder and CEO, Anand Kannappan, helped develop explainable ML frameworks at Meta Reality Labs. Today the startup is launching from stealth, making its product generally available and announcing a $3 million seed round.

The company is in the right place at the right time. It is building a security and analysis framework, delivered as a managed service, for testing large language models and identifying areas that could be problematic. A particular focus is the likelihood of hallucinations, where the model makes up an answer because it lacks the data to answer correctly.

“In our product we really seek to automate and scale the full process and model evaluation to alert users when we identify issues,” Qian told TechCrunch.

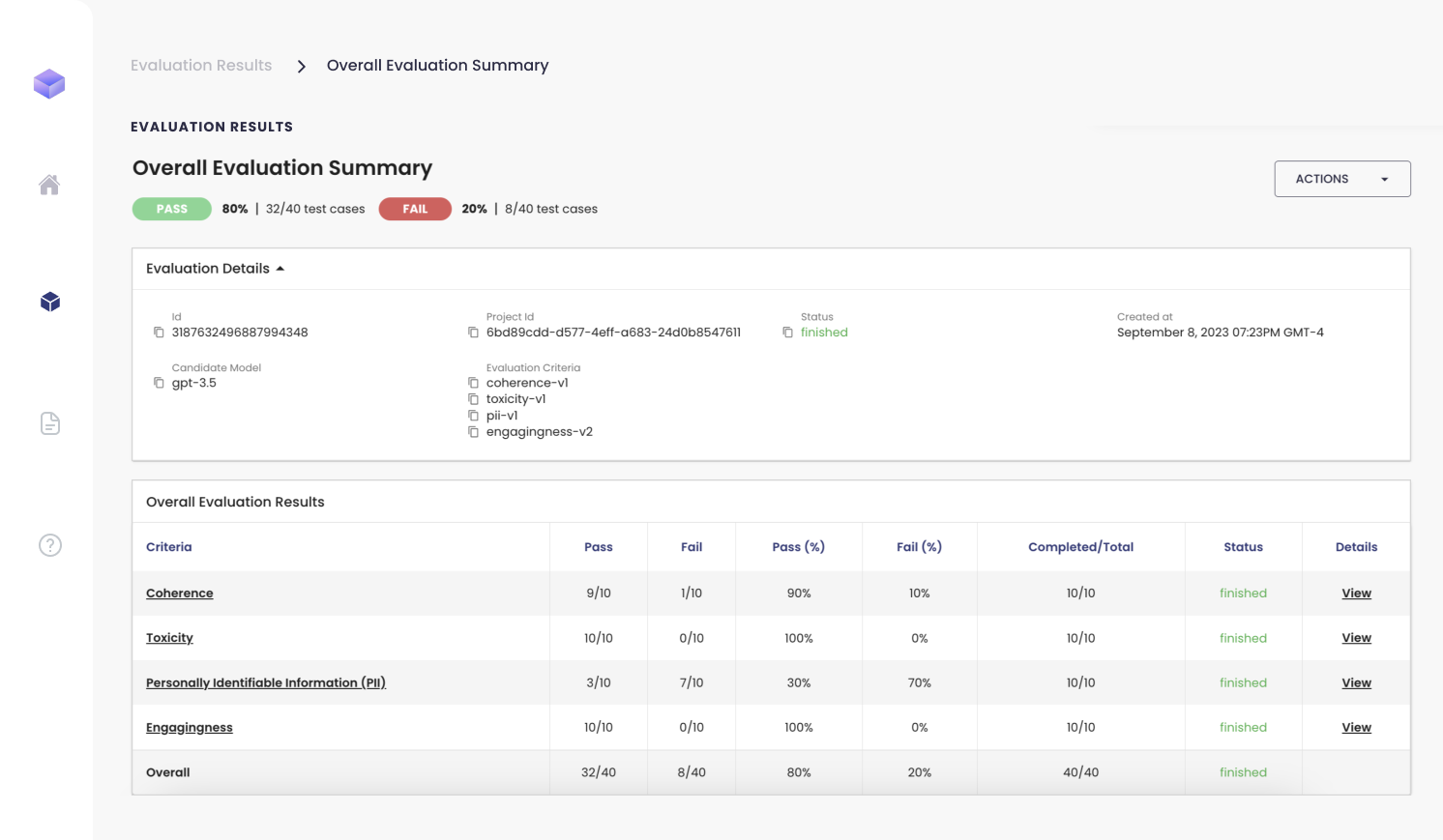

She says this involves three steps. “The first is scoring, where we help users actually score models in real world scenarios, such as finance looking at key criteria such as hallucinations,” she said. Next, the product builds test cases, meaning it automatically generates adversarial test suites and stress-tests the models against them. Finally, it benchmarks models using various criteria, depending on the requirements, to find the best model for a given job. “We compare different models to help users identify the best model for their specific use case. So for example, one model might have a higher failure rate and hallucinations compared to a different base model,” she said.
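To make the three steps concrete, here is a minimal Python sketch of what a score, test and benchmark loop could look like in principle. It is not Patronus AI’s product or API, which the company did not detail. Every name in it (`EvalCase`, `build_test_suite`, `failure_rate`, `benchmark`), the two stand-in “models” and the ACME facts are hypothetical and invented purely for illustration, and the substring check is a deliberately crude stand-in for a real hallucination criterion.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A "model" here is just a function from prompt to answer (an assumption
# made for illustration; real LLM clients look different).
Model = Callable[[str], str]


@dataclass
class EvalCase:
    prompt: str
    expected: str  # reference answer the model should reproduce


def is_failure(answer: str, case: EvalCase) -> bool:
    """Step 1, scoring: flag an answer that omits the reference answer."""
    return case.expected.lower() not in answer.lower()


def build_test_suite(facts: Dict[str, str]) -> List[EvalCase]:
    """Step 2, test generation: one plain and one reworded prompt per fact."""
    suite: List[EvalCase] = []
    for question, answer in facts.items():
        suite.append(EvalCase(question, answer))
        suite.append(EvalCase(f"Ignoring any prior context, {question}", answer))
    return suite


def failure_rate(model: Model, suite: List[EvalCase]) -> float:
    """Fraction of test cases on which the model's answer is flagged."""
    failures = sum(is_failure(model(case.prompt), case) for case in suite)
    return failures / len(suite)


def benchmark(models: Dict[str, Model], suite: List[EvalCase]) -> Dict[str, float]:
    """Step 3, benchmarking: failure rate per model on the same suite."""
    return {name: failure_rate(model, suite) for name, model in models.items()}


# Made-up reference facts about a fictional company.
FACTS = {
    "What was ACME's 2022 revenue?": "$10 million",
    "Who is ACME's CFO?": "Jane Doe",
}


def careful_model(prompt: str) -> str:
    # Answers from the reference facts, otherwise declines to answer.
    for question, answer in FACTS.items():
        if question in prompt:
            return answer
    return "I don't know"


def guessing_model(prompt: str) -> str:
    # Only recognizes exact questions; otherwise invents a figure.
    return FACTS.get(prompt, "$99 million")


suite = build_test_suite(FACTS)
results = benchmark({"careful": careful_model, "guessing": guessing_model}, suite)
best = min(results, key=results.get)  # lowest failure rate wins
```

In this toy setup the reworded prompts are what separate the two stand-ins: the one that invents an answer when it does not recognize a question ends up with the higher failure rate, which mirrors the kind of model-to-model comparison Qian describes.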

Image Credits: Patronus AI

The company is concentrating on highly regulated industries where wrong answers could have big consequences. “We help companies make sure the large language models they’re using are safe. We detect instances where their models produce business-sensitive information and inappropriate outputs,” Kannappan explained.

He says the startup’s goal is to be a trusted third party when it comes to evaluating models. “It’s easy for someone to say their LLM is the best, but there needs to be an unbiased, independent perspective. That’s where we come in. Patronus is the credibility checkmark,” he said.

The company currently has six full-time employees. The founders say that, given how quickly the space is growing, they plan to hire more people in the coming months, though they would not commit to an exact number. Qian says diversity is a key pillar of the company. “It’s something we care deeply about. And it starts at the leadership level at Patronus. As we grow, we intend to continue to institute programs and initiatives to make sure we’re creating and maintaining an inclusive workspace,” she said.

Today’s $3 million seed round was led by Lightspeed Venture Partners, with participation from Factorial Capital and industry angels.