Google drops ‘stronger’ and ‘significantly improved’ experimental Gemini models

Google is continuing its aggressive Gemini updates as it races towards its 2.0 model.

The company today announced a smaller variant of Gemini 1.5, Gemini 1.5 Flash-8B, alongside a “significantly improved” Gemini 1.5 Flash and a “stronger” Gemini 1.5 Pro. These show increased performance against many internal benchmarks, the company says, with “huge gains” across the board for 1.5 Flash and a 1.5 Pro that is much better at math, coding and complex prompts.

“Gemini 1.5 Flash is the best… in the world for developers right now,” Logan Kilpatrick, product lead for Google AI Studio, boasted in a post on X.

‘Newest experimental iteration’ of ‘unprecedented’ Gemini models

Google introduced Gemini 1.5 Flash — the lightweight version of Gemini 1.5 — in May. The Gemini 1.5 family of models was built to handle long contexts and can reason over fine-grained information from 10M and more tokens. This allows the models to process high-volume multimodal inputs including documents, video and audio.

Today, Google is making available an “improved version” of a smaller, 8-billion-parameter variant of Gemini 1.5 Flash. Meanwhile, the new Gemini 1.5 Pro shows performance gains on coding and complex prompts and serves as a “drop-in replacement” for the previous model released in early August.

Kilpatrick was light on additional details, saying that Google will make a future version available for production use in the coming weeks that “hopefully will come with evals!”

He explained in an X thread that the experimental models are a means to gather feedback and get the latest, ongoing updates into the hands of developers as quickly as possible. “What we learn from experimental launches informs how we release models more widely,” he posted.

The “newest experimental iteration” of both Gemini 1.5 Flash and Pro features a 1 million token limit and is available to test for free via Google AI Studio and the Gemini API, and will soon be available through the Vertex AI experimental endpoint. There is a free tier for both.

Beginning Sept. 3, Google will automatically reroute requests to the new model and will remove the older model from Google AI Studio and the API to “avoid confusion with keeping too many versions live at the same time,” said Kilpatrick.

“We are excited to see what you think and to hear how this model might unlock even more new multimodal use cases,” he posted on X.

Google DeepMind researchers call Gemini 1.5’s scale “unprecedented” among contemporary LLMs.

“We have been blown away by the excitement for our initial experimental model we released earlier this month,” Kilpatrick posted on X. “There has been lots of hard work behind the scenes at Google to bring these models to the world, we can’t wait to see what you build!”

‘Solid improvements,’ still suffers from ‘lazy coding disease’

Just a few hours after the release today, the Large Model Systems Organization (LMSYS Org) posted a leaderboard update to its Chatbot Arena based on 20,000 community votes. Gemini 1.5 Flash made a “huge leap,” climbing from 23rd to sixth place, matching Llama levels and outperforming Google’s Gemma open models.

Gemini 1.5 Pro also showed “strong gains” in coding and math and “improve[d] significantly.”

LMSYS Org lauded the models, posting: “Big congrats to Google DeepMind Gemini team on the incredible launch!”

As per usual with iterative model releases, early feedback has been all over the place — from sycophantic praise to mockery and confusion.

Some X users questioned why Google is shipping so many back-to-back updates rather than a 2.0 version. One posted: “Dude this isn’t going to cut it anymore 😐 we need Gemini 2.0, a real upgrade.”

On the other hand, many self-described fanboys lauded the fast upgrades and quick shipping, reporting “solid improvements” in image analysis. “The speed is fire,” one posted, and another pointed out that Google continues to ship while OpenAI has effectively been quiet. One went so far as to say that “the Google team is silently, diligently and constantly delivering.”

Some critics, though, call it “terrible” and “lazy” on tasks requiring longer outputs, saying Google is “far behind” OpenAI and Anthropic’s Claude.

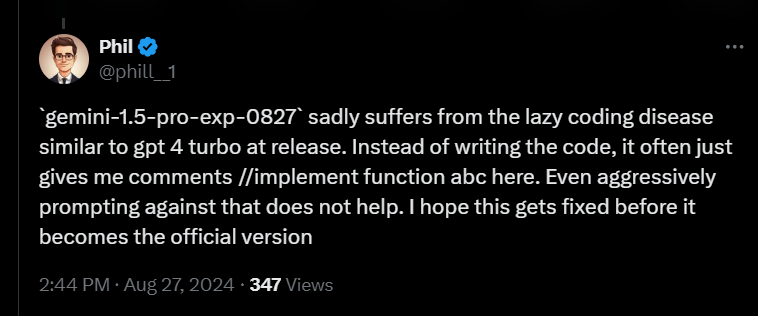

The update “sadly suffers from the lazy coding disease” similar to GPT-4 Turbo, one X user lamented.

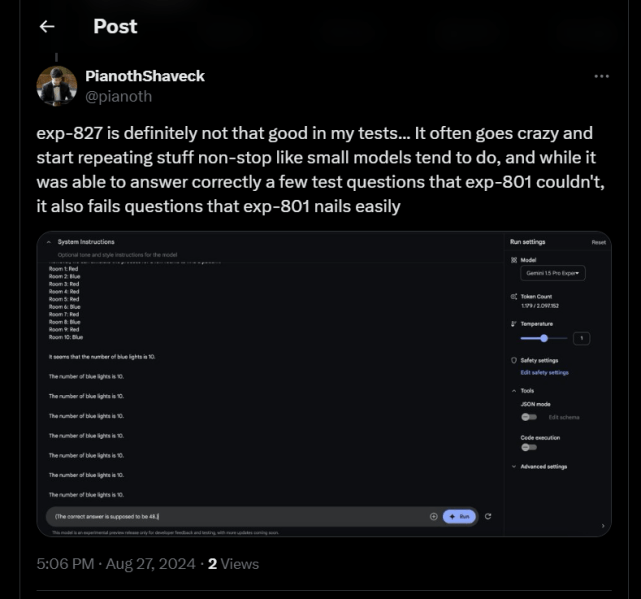

Another called the updated version “definitely not that good” and said it “often goes crazy and starts repeating stuff non-stop like small models tend to do.” Yet another said they were excited to try it but agreed that Gemini has “been by far the worst at coding.”

Some also poked fun at Google’s uninspired naming capabilities and called back to its huge woke blunder earlier this year.

“You guys have completely lost the ability to name things,” one user joked, and another agreed, “You guys seriously need someone to help you with nomenclature.”

And one dryly asked: “Does Gemini 1.5 still hate white people?”
